By: Levi Masonde | Updated: 2024-10-01

Problem

You have started working on AI applications and learned the basics: understanding the concepts and writing code that interacts with large language models (LLMs). However, most tutorials stop at that introductory level and do not show how to build a well-rounded, deployable application. In this article, we look at how to add message history and a user interface to an LLM application.

Solution

Message history and a user interface (UI) turn a bare LLM script into an application people can actually use, and both are key steps toward deployment.

In this tutorial, you will learn how to add message history and a web UI to a LangChain chatbot application. It builds on concepts introduced in a previous article and explores new ones, such as how an LLM application can use local storage as memory.

Prerequisites

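- Visual Studio Code

- Python

- LangChain (the langchain-core, langchain-community, and langchain-cohere packages)

- Flask and SQLAlchemy (SQLChatMessageHistory uses SQLAlchemy to talk to SQLite)

- A Cohere API key, stored in the COHERE_API_KEY environment variable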

Prompt Template

A prompt template is a reusable set of instructions that combines fixed text with user input to guide a model's response. It gives the model context for a given task or question. You can use different prompting techniques with your template, including zero-shot and few-shot prompts. You can read more about these techniques here.

This tip uses ChatPromptTemplate, which builds prompts for chat models as a list of chat messages. In addition to its content, each message carries a role: system, human, or AI.

This is the prompt code for this article:

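```python
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

# Define the prompt template
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You're an assistant who speaks in {language}. Respond in 20 words or more",
        ),
        # Earlier conversation turns are inserted here at run time
        MessagesPlaceholder(variable_name="history"),
        ("human", "{input}"),
    ]
)
```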

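The template has three parts. The system message tells the assistant which language to use and how long its answers should be, the MessagesPlaceholder named history is where the previous messages of the conversation are inserted, and the human message carries the user's new input.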

Runnables Interface

A Runnable is a unit of work that can be invoked, batched, streamed, transformed, and composed. The Runnable interface makes it easy to combine components into custom chains, for example by piping a prompt into a model with the | operator. Here, you will create a Runnable from an LLM and the prompt template defined in the previous section.

LangChain Runnables expose the following methods:

- stream: Stream back chunks of the output.

- invoke: Call the chain on a single input.

- batch: Call the chain on a list of inputs.

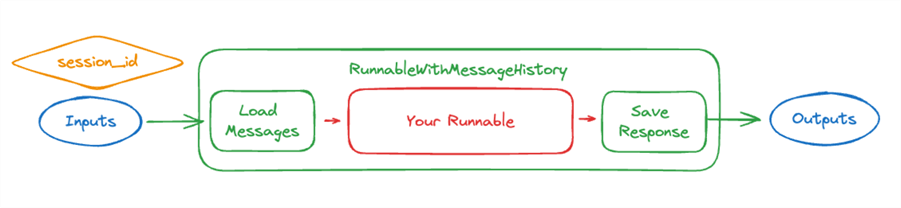

This tutorial uses the RunnableWithMessageHistory class, which adds a message history to your chain. This class loads messages before it passes them through the Runnable and then saves the AI response after the Runnable is called. The RunnableWithMessageHistory class also has a session_id parameter to create multiple conversions:

image source: https://python.langchain.com/v0.1/docs/integrations/chat/

RunnableWithMessageHistory also needs a get_session_history function. By default, this function takes a session_id and returns a BaseChatMessageHistory object, which is responsible for loading and saving messages. In the application below, the function instead takes a user_id and a conversation_id and returns a SQLChatMessageHistory object, which stores the messages in a local SQLite database file (memory.db).

Creating the Web User Interface

LangChain can be used inside a Python web framework such as Flask, a lightweight framework built on the Web Server Gateway Interface (WSGI), to create web applications. To create your Flask application, create a new file named App.py and add the following code:

```python
# MSSQLTips Code
import os

from flask import Flask, request, jsonify, render_template
from langchain_cohere import ChatCohere
from langchain_community.chat_message_histories import SQLChatMessageHistory
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.runnables import ConfigurableFieldSpec
from langchain_core.runnables.history import RunnableWithMessageHistory

# Initialize Flask app
app = Flask(__name__)

# Retrieve environment variables
cohere_api_key = os.getenv("COHERE_API_KEY")
if not cohere_api_key:
    raise ValueError("COHERE_API_KEY environment variable not found")

# Initialize the ChatCohere model
model = ChatCohere(model="command-r", cohere_api_key=cohere_api_key)

# Define the prompt template
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You're an assistant who speaks in {language}. Respond in 20 words or more",
        ),
        MessagesPlaceholder(variable_name="history"),
        ("human", "{input}"),
    ]
)

# Pipe the formatted prompt into the model
runnable = prompt | model


# Function to get session history: one SQLite-backed history per user and conversation
def get_session_history(user_id: str, conversation_id: str):
    return SQLChatMessageHistory(f"{user_id}--{conversation_id}", "sqlite:///memory.db")


# Load history before each call and save the new messages after it.
# history_factory_config replaces the default session_id field with two fields,
# which RunnableWithMessageHistory passes to get_session_history as keyword arguments.
with_message_history = RunnableWithMessageHistory(
    runnable,
    get_session_history,
    input_messages_key="input",
    history_messages_key="history",
    history_factory_config=[
        ConfigurableFieldSpec(
            id="user_id",
            annotation=str,
            name="User ID",
            description="Unique identifier for the user.",
            default="",
            is_shared=True,
        ),
        ConfigurableFieldSpec(
            id="conversation_id",
            annotation=str,
            name="Conversation ID",
            description="Unique identifier for the conversation.",
            default="",
            is_shared=True,
        ),
    ],
)


@app.route("/")
def index():
    # Serve the chat page from templates/index.html
    return render_template("index.html")


@app.route("/chat", methods=["POST"])
def chat():
    # silent=True returns None instead of raising on a missing or malformed JSON body
    data = request.get_json(silent=True) or {}
    language = data.get("language", "english")
    input_text = data.get("input")
    user_id = data.get("user_id")
    conversation_id = data.get("conversation_id")

    if not input_text or not user_id or not conversation_id:
        return jsonify({"error": "Missing input, user_id, or conversation_id"}), 400

    # These values select which stored conversation is loaded and updated
    config = {"configurable": {"user_id": user_id, "conversation_id": conversation_id}}
    response = with_message_history.invoke({"language": language, "input": input_text}, config=config)
    return jsonify({"response": response.content})


@app.route("/history/<user_id>/<conversation_id>", methods=["GET"])
def get_history(user_id, conversation_id):
    session_history = get_session_history(user_id, conversation_id)
    # Report each stored message's class name (HumanMessage or AIMessage) and its text
    messages = [
        {"type": message.__class__.__name__, "content": message.content}
        for message in session_history.messages
    ]
    return jsonify({"history": messages})


# Run the Flask app (debug mode is meant for local development only)
if __name__ == "__main__":
    app.run(debug=True)
```
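The tip does not show the templates/index.html page, but any front end only needs to follow the JSON contract of the /chat and /history routes. As a hedged illustration, this standard-library client sketch assumes App.py is running on Flask's default development address (http://127.0.0.1:5000); the helper names build_chat_request, history_url, send_chat, and fetch_history are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumption: App.py is running locally on Flask's default development address
BASE_URL = "http://127.0.0.1:5000"


def build_chat_request(input_text, user_id, conversation_id, language="english"):
    """Build a POST /chat request carrying the JSON fields the route reads."""
    payload = {
        "language": language,
        "input": input_text,
        "user_id": user_id,
        "conversation_id": conversation_id,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def history_url(user_id, conversation_id):
    """Build the GET /history URL, escaping IDs so each stays one path segment."""
    user = urllib.parse.quote(user_id, safe="")
    conversation = urllib.parse.quote(conversation_id, safe="")
    return f"{BASE_URL}/history/{user}/{conversation}"


def send_chat(input_text, user_id, conversation_id, language="english"):
    """Send one message and return the model's reply text."""
    chat_request = build_chat_request(input_text, user_id, conversation_id, language)
    with urllib.request.urlopen(chat_request) as response:
        return json.loads(response.read())["response"]


def fetch_history(user_id, conversation_id):
    """Return the stored messages for one conversation."""
    with urllib.request.urlopen(history_url(user_id, conversation_id)) as response:
        return json.loads(response.read())["history"]
```

For example, with App.py running, `send_chat("Hello!", "user1", "conv1")` returns the model's reply, and `fetch_history("user1", "conv1")` then lists the stored HumanMessage and AIMessage entries. A missing field makes /chat return HTTP 400, which urlopen raises as an HTTPError.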

Results

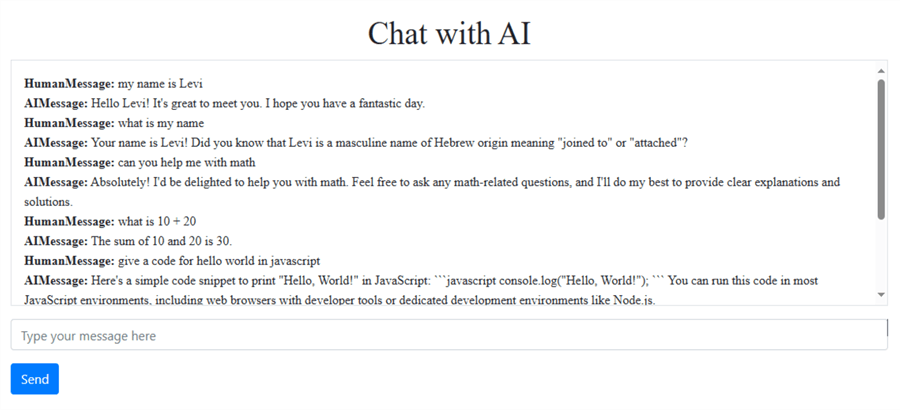

This is how the application looks:

Conclusion

Creating LLM applications will only become more popular and easier with time. However, it is important to understand the concepts behind the technology. This tutorial shows how an LLM application can have a "memory": LangChain's RunnableWithMessageHistory class stores each conversation through SQLChatMessageHistory and feeds it back to the model on the next turn.

Next Steps

- Learn how to use LangChain Prompt Templates with OpenAI LLMs.

- Learn more about how LLMs work.

- Learn how to Create AI Models with T-SQL to Buy or Sell Financial Securities.

- Learn more about Artificial Intelligence Features in Power BI for Report Development.

- Learn how Large Language Models (LLMs) are used to train artificial intelligence (AI) tools such as ChatGPT.

About the author

Levi Masonde is a developer passionate about analyzing large datasets and creating useful information from these data. He is proficient in Python, ReactJS, and Power Platform applications. He is responsible for creating applications and managing databases as well as a lifetime student of programming and enjoys learning new technologies and how to utilize and share what he learns.

This author pledges the content of this article is based on professional experience and not AI generated.
