By: Rick Dobson | Updated: 2024-05-10 | Comments | Related: 1 | 2 | 3 | More > Import and Export

Problem

I want my team of SQL Server professionals to improve the efficiency of their file import projects through the application of the bulk insert statement. Please present multiple use case examples for the bulk insert statement so I can motivate them to apply it more regularly to the file import projects we are required to run.

Solution

The T-SQL bulk insert statement is specifically designed to input the contents of large files into SQL Server tables. However, bulk insert statements can be readily adapted for importing both small files as well as large files and/or many medium-sized files. If you enjoy programming in T-SQL or conclude that SSIS is overkill for some of your file import projects, bulk insert statements may offer the right level of support and give you a performance benefit.

This tip presents three progressively more advanced bulk insert use case examples. The download includes sample data for each use case example and additional data samples to practice with fresh data.

- Use Case #1: Illustrates the basics of invoking a bulk insert statement for importing a TXT file and parsing selected elements of the imported file into a SQL Server results set.

- Use Case #2: Focuses on how to write T-SQL code to import each of three files into a single SQL Server table. Each file uses its filename to indicate the category for the type of data in a file.

- Use Case #3: Demonstrates how to make bulk insert statements dynamic through the SQL Server replace function and a stored procedure to import a set of files designated by their names into a SQL Server table.

Use Case #1: Importing a TXT File into a SQL Server Results Set

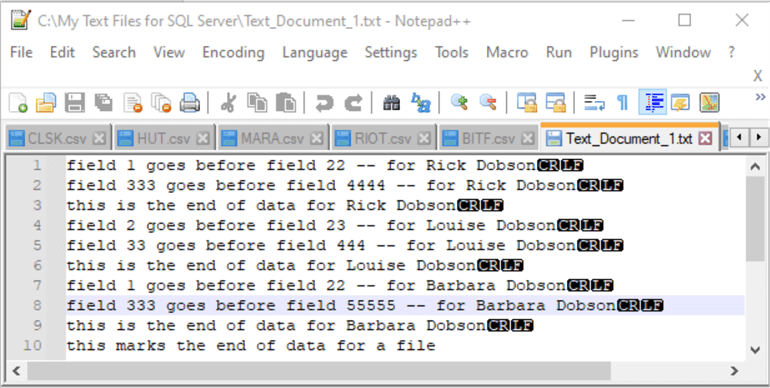

The following screenshot displays the contents of an arbitrary TXT file created to demonstrate the first use case example. The file appears in a Notepad++ session. The header for the session indicates the file resides on the C drive in the My Text Files for SQL Server folder. The filename is Text_Document_1.txt. The filename and path to the file are important because they are required by the FROM clause of a bulk insert statement.

The file contents appear in 10 lines within the Notepad++ session. The CR and LF at the end of the first nine lines are row terminators. These characters correspond to default row terminators for the bulk insert statement. The last line in a file does not require a row terminator.

Notice that the data inside the Notepad++ tab for the Text_Document_1.txt file contains free-form text – that is, the data are not organized or delimited into rows and columns. The data are for three persons named Rick Dobson, Louise Dobson, and Barbara Dobson. The data for each person appears on two successive lines. After the second line for each person, a third line indicates the end of data for a person. Another line type is the last line in the TXT file. This line marks the end of the file.

The following script illustrates the T-SQL code for the first use case.

- The two statements in the comment block at the top of the script create a fresh copy of the for_char_data_lines database.

- The next several lines after the comment block designate the for_char_data_lines database as the default database for the rest of the script and create a fresh copy of the char_data_lines table in the dbo schema of the database.

- The bulk insert statement after the create table statement for the char_data_lines table pushes the lines from the Text_Document_1.txt file to the char_data_lines table.

- The code after the bulk insert statement parses three sets of values from the rows in the char_data_lines table. A version of this kind of parsing code will typically appear in scripts that import free-form text data.

/*

-- run once from a fresh SQL Server session

-- before invoking the uncommented code in this script

drop database if exists for_char_data_lines;

create database for_char_data_lines;

*/

-- create a fresh version of dbo.char_data_lines table

-- in for_char_data_lines database

use for_char_data_lines;

drop table if exists char_data_lines;

create table dbo.char_data_lines (

line nvarchar(80)

);

-- bulk insert C:\My Text Files for SQL Server\Text_Document_1.txt

-- to the dbo.char_data_lines table

-- in the for_char_data_lines database

bulk insert dbo.char_data_lines

from 'C:\My Text Files for SQL Server\Text_Document_1.txt';

-- optionally display unparsed lines from dbo.char_data_lines tables

-- select * from dbo.char_data_lines

-- parse first and second field values

-- along with full name from each line

select line

,substring(

line

,charindex(' ',line)+1

,(charindex(' ',line,charindex(' ',line)+1)-charindex(' ',line))-1) [first field number]

,substring(line

,(charindex('goes before field',line) + len('goes before field') +1)

,((charindex(' ',line,(charindex('goes before field',line) + len('goes before field') +1)))

-

(charindex('goes before field',line) + len('goes before field') +1))) [second field number]

,substring(line, charindex(' for ',line)+5,99) [full name]

from dbo.char_data_lines

where not(left(line, 15) = 'this is the end' or left(line, 18) = 'this marks the end')

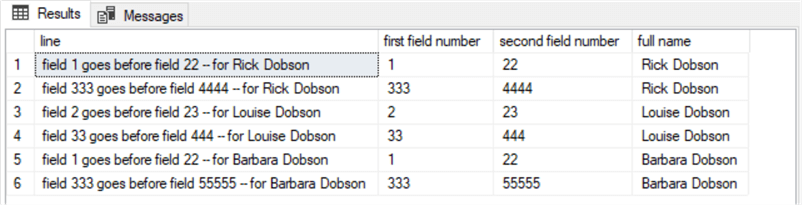

A good approach to learning about the role and operation of the parsing code is to view the results set from the select statement after the bulk insert statement. The following excerpt is from the Results tab in SQL Server Management Studio after running the preceding script.

- There are only six rows in the results set from the script. These rows correspond to the two lines for each person in the TXT file referenced by the from clause within the bulk insert statement. The transformation of the line values in the char_data_lines table rows is implemented with four SQL Server string functions (substring, charindex, len, and left).

- The where clause at the end of the select statement excludes lines marking the end of data for each person and the overall source file referenced by the bulk insert statement.

- Three fields are extracted from each line:

- The number following the first instance of the word field on a line.

- The number following the second instance of the word field on a line.

- The full name of the person on each of the two lines for each person.

Use Case #2: Import and Categorize Three CSV Files into a SQL Server Table

The second case study example imports three CSV files with bulk insert statements into a SQL Server table. All three files have the same layout for their content. The file rows are unique by stock ticker and date. The columns for each row contain different kinds of prices as well as the number of shares traded during a day. This general kind of data layout is common in many business applications, such as tracking salaries, commissions, and bonuses earned by salespersons from different offices or the number and dollar amount of orders input through various pages on a website.

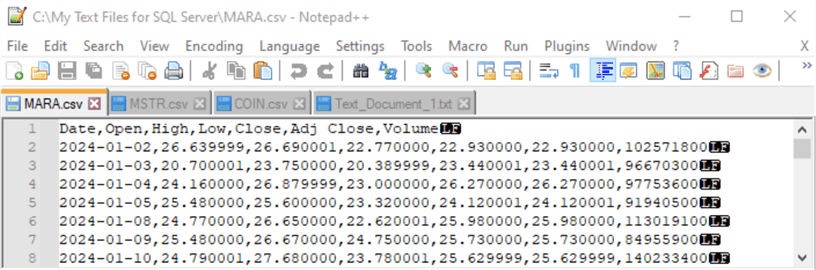

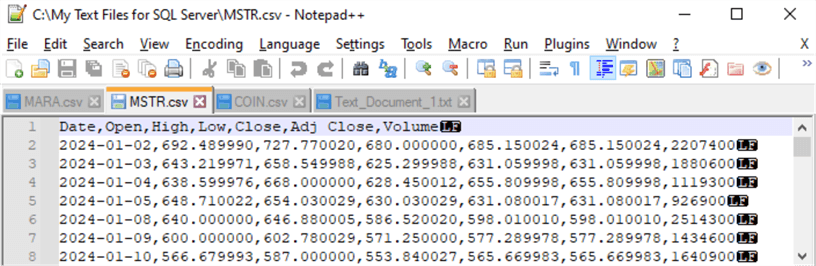

The following table shows an excerpt from each of the three files. The filenames are MARA.csv, MSTR.csv, and COIN.csv. The files reside in the same Windows drive and folder (C:\My Text Files for SQL Server) as the preceding use case example.

- The top table cell shows the first eight rows from MARA.csv. This excerpt displays the column headers (Date, …, Volume) followed by date and numeric values.

- The middle table cell shows the first eight rows from MSTR.csv. As you can see, the layout of its data is the same as the MARA.csv file. Also, the date values are the same in the top and middle table cells (starting at 2024-01-02 and ending at 2024-01-10).

- The bottom table cell shows the last eight rows from COIN.csv. The data layout for this table cell matches the preceding two table cells, except that it does not show column headers. Column headers may optionally be included in CSV files. In this use case example, all three CSV files have column headers in their first file row. Also, all three CSV files have the same ending date (2024-03-28), which appears as the first field value in the bottom row of the bottom table cell.

The following script shows the T-SQL code for importing the three CSV files into a SQL Server table. There are four segments to the script that are separated from one another by a row of single-line comment markers.

- The first segment starts by designating the for_char_data_lines database

as the default one for the script. This segment also creates fresh versions

of two tables.

- SQL Server Table 1: Stores the original contents of each of the three CSV files in the dbo.imported_data_for_a_category table. This table is freshly populated for each of the three CSV files. The columns of this table correspond to the table excerpts that the preceding table displays.

- SQL Server Table 2 (dbo.imported_data_across_categories): Stores data from all three CSV files. The first column in the table has the name symbol. This column stores the ticker symbol for each CSV file. In other words, the symbol column categorizes the rows from each CSV file.

- The second segment has three steps.

- Step 1: Copies the

contents of the MARA.csv file to the dbo.imported_data_for_a_category

table with a bulk insert statement that relies on four parameter settings:

- Format is for the type of character data being imported – namely, character data from a CSV file,

- firstrow designates the beginning data row in the source file,

- fieldterminator designates the delimiter character (,) for field values,

- rowterminator specifies the character (0x0a) to mark the end of a row; another commonly used rowterminator value is (\n\r)

- Step 2: Copies (using an insert into bulk statement) the contents of the dbo.imported_data_for_a_category table to the dbo.imported_data_across_categories table. This step also populates the symbol column of the dbo.imported_data_across_categories table with mara, which is the name of the source CSV file for the segment.

- Step 3: Echoes the contents of the dbo.imported_data_across_categories table into the Results tab within SQL Server Management Studio.

- Step 1: Copies the

contents of the MARA.csv file to the dbo.imported_data_for_a_category

table with a bulk insert statement that relies on four parameter settings:

- With a couple of exceptions, the third and fourth segments are identical

to the second segment.

- Both of these segments begin with a truncate table statement for the dbo.imported_data_for_a_category table. The truncate table statement is required to clear any prior data from the table before running the bulk insert statement to copy fresh data from another CSV file. The second segment does not require a truncate table statement because the dbo.imported_data_for_a_category table was not previously populated.

- The third and fourth segments populate the symbol column in the dbo.imported_data_across_categories

table with a different value (mara) than in the second segment.

- In the third segment, the string value assigned to the symbol column is mstr.

- In the fourth segment, the string value assigned to the symbol column is coin.

use for_char_data_lines;

-- create fresh versions of the

-- dbo.imported_data_for_a_category and

-- the dbo.imported_data_across_categories tables

drop table if exists dbo.imported_data_for_a_category;

create table dbo.imported_data_for_a_category (

[Date] date

,[Open] dec(21,6)

,[High] dec(21,6)

,[Low] dec(21,6)

,[Close] dec(21,6)

,[Adj Close] dec(21,6)

,[Volume] dec(19,0)

);

drop table if exists dbo.imported_data_across_categories;

create table dbo.imported_data_across_categories (

[Symbol] nvarchar(10)

,[Date] date

,[Open] dec(21,6)

,[High] dec(21,6)

,[Low] dec(21,6)

,[Close] dec(21,6)

,[Adj Close] dec(21,6)

,[Volume] dec(19,0)

);

----------------------------------------------------------

-- bulk insert file to imported_data_for_a_category

bulk insert dbo.imported_data_for_a_category

from 'C:\My Text Files for SQL Server\MARA.csv'

with (

format = 'CSV'

,firstrow = 2

,fieldterminator = ','

,rowterminator = '0x0a')

-- optionally display dbo.imported_data_for_a_category

-- select * from dbo.imported_data_for_a_category

-- insert imported_data_for_a_category

-- into dbo.imported_data_across_categories

-- with category id as symbol column value

insert into dbo.imported_data_across_categories

select 'mara', * from dbo.imported_data_for_a_category

-- optionally display dbo.imported_data_across_categories

select * from dbo.imported_data_across_categories

----------------------------------------------------------

-- erase contents of dbo.imported_data_for_a_category;

truncate table dbo.imported_data_for_a_category;

-- bulk insert file to imported_data_for_a_category

bulk insert dbo.imported_data_for_a_category

from 'C:\My Text Files for SQL Server\MSTR.csv'

with (

format = 'CSV'

,firstrow = 2

,fieldterminator = ','

,rowterminator = '0x0a')

-- optionally display dbo.imported_data_for_a_category

-- select * from dbo.imported_data_for_a_category

-- insert imported_data_for_a_category

-- into dbo.imported_data_across_categories

-- with category id as symbol column value

insert into dbo.imported_data_across_categories

select 'mstr', * from dbo.imported_data_for_a_category

-- optionally display dbo.imported_data_across_categories

select * from dbo.imported_data_across_categories

----------------------------------------------------------

-- erase contents of dbo.imported_data_for_a_category;

truncate table dbo.imported_data_for_a_category;

-- bulk insert file to imported_data_for_a_category

bulk insert dbo.imported_data_for_a_category

from 'C:\My Text Files for SQL Server\COIN.csv'

with (

format = 'CSV'

,firstrow = 2

,fieldterminator = ','

,rowterminator = '0x0a')

-- optionally display dbo.imported_data_for_a_category

-- select * from dbo.imported_data_for_a_category

-- insert imported_data_for_a_category

-- into dbo.imported_data_across_categories

-- with category id as symbol column value

insert into dbo.imported_data_across_categories

select 'coin', * from dbo.imported_data_for_a_category

-- optionally display dbo.imported_data_across_categories

select * from dbo.imported_data_across_categories

----------------------------------------------------------

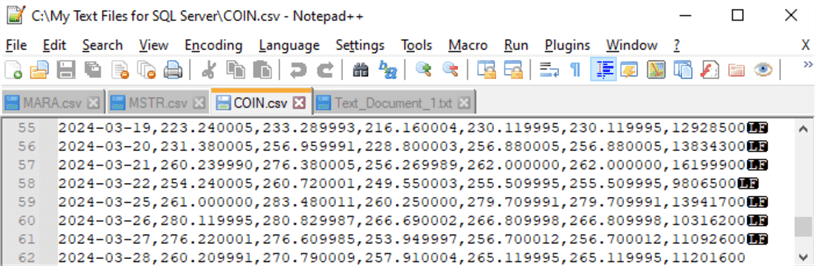

The following screenshot shows three excerpts that are, respectively, from the last select statements at the end of the second, third, and fourth segments in the preceding script.

- Top Excerpt: The first eight data rows for the mara symbol.

- Middle Excerpt: The first eight data rows for the mstr symbol.

- Bottom Excerpt: The bottom eight data rows for the coin symbol.

Use Case #3: Using Dynamic SQL and a Stored Procedure to Import CSV Files

The script in the preceding section illustrates how to import multiple CSV files into a SQL Server table with the help of the bulk insert statement. Unfortunately, the bulk insert statement requires a literal value for each source file to be imported. If you only have a few files to import, it will not be a significant issue to update a few filenames. However, as the number of new files to import grows, these manual updates can introduce errors in the script for importing the files.

This section describes a new script to remedy this issue involving dynamic SQL embedded in a stored procedure. The stored procedure accepts an input parameter with the name of the file to be imported. The T-SQL inside the stored procedure replaces a portion of the SQL that invokes the bulk insert statement and populates a target table with values from a source file. Therefore, you can easily run the solution in this section by specifying the stored procedure’s input parameter for each source filename.

There are three segments to the script that are separated by a row of single-line comment markers.

- The first segment creates two tables—one for accepting a single CSV file and a second for saving the imported contents, with a new column for each CSV filename.

- The second segment creates a stored procedure with a bulk insert statement specified as a SQL string. This segment also updates the SQL string with the name of the file to be imported and then populates the first table for accepting file contents. Next, the stored procedure code populates the second table containing the filename with its imported contents. The final operation in the stored procedure truncates the first table so that an empty table is available for any re-run of the stored procedure with a new CSV filename.

- The third segment shows how to run the script created in the second segment

to populate the two files created in the first segment. There are just

four lines of code in the third segment:

- Lines 1-3: Invoke the stored procedure for each of the three CSV file names.

- Line 4: Displays the contents of the second table with values from all source files, which are MARA.csv, MSTR.csv, and COIN.csv, in the following code sample.

Here is the script described above. Notice that the first and second segments operate only once. After each segment runs once, you can re-run the stored procedure for as many files as you must import. Each file imported to SQL Server must reside in the My Text Files for SQL Server folder of the C drive.

use for_char_data_lines;

-- create fresh versions of the

-- dbo.imported_data_for_a_category

-- and dbo.imported_data_across_categories

drop table if exists dbo.imported_data_for_a_category;

create table dbo.imported_data_for_a_category (

[Date] date

,[Open] dec(21,6)

,[High] dec(21,6)

,[Low] dec(21,6)

,[Close] dec(21,6)

,[Adj Close] dec(21,6)

,[Volume] dec(19,0)

);

drop table if exists dbo.imported_data_across_categories;

create table dbo.imported_data_across_categories (

[Symbol] nvarchar(10)

,[Date] date

,[Open] dec(21,6)

,[High] dec(21,6)

,[Low] dec(21,6)

,[Close] dec(21,6)

,[Adj Close] dec(21,6)

,[Volume] dec(19,0)

);

----------------------------------------------------------

-- create stored proc to populate @filename and

-- dbo.imported_data_for_a_category

-- populate dbo.imported_data_across_categories

-- based on @filename and dbo.imported_data_for_a_category

drop procedure if exists dbo.add_rows_to_table_from_a_file;

go

-- add code to start stored proc here

create procedure dbo.add_rows_to_table_from_a_file

(@infilename nvarchar(10))

as

begin

declare

@sql nvarchar(max)

,@sql_b4replace nvarchar(max)

,@path nvarchar (100)

,@filename nvarchar(10)

-- assign @infilename parameter value

-- to @filename local variable

set @filename = @infilename

-- dynamic sql base code for the stored procedure

set @sql_b4replace = '

bulk insert dbo.imported_data_for_a_category

from ''C:\My Text Files for SQL Server\csv_file_name.csv''

with (

format = ''CSV''

,firstrow = 2

,fieldterminator = '',''

,rowterminator = ''0x0a'')

'

-- invoke replace function for @sql_b4replace

-- to set @sql for a new source file

set @sql = replace(@sql_b4replace,'csv_file_name',@filename)

-- execute @sql for current value of @filename

exec (@sql)

-- insert imported_data_for_a_category

-- into dbo.imported_data_across_categories

-- with category id as symbol column value

insert into dbo.imported_data_across_categories

select @filename, * from dbo.imported_data_for_a_category

-- truncate dbo.imported_data_for_a_category

-- for the next category if there is one

truncate table dbo.imported_data_for_a_category

end

go

----------------------------------------------------------

-- successively invoke the stored procedure

-- for each filename

exec dbo.add_rows_to_table_from_a_file 'mara';

exec dbo.add_rows_to_table_from_a_file 'mstr';

exec dbo.add_rows_to_table_from_a_file 'coin';

-- optionally display dbo.imported_data_across_categories

select * from dbo.imported_data_across_categories

Since the instructions for running the code in this section are for the same files as the preceding section, you will get the same Results tab output at the end of the prior section.

Next Steps

This tip introduces the basics of using the bulk insert statement with three different use case examples. I regularly use the CSV file examples in this tip to download financial data from the Yahoo Finance site to SQL Server tables. This code can also be adapted for many other kinds of data, such as human resources data about company employees or orders coming from user responses to a web page.

Download the files for this article.

There are at least three next steps.

- Run the use case examples as described in this tip. You can scrape the code for the use case examples. You can find the source data files for use case examples in the download for this tip.

- The download includes two additional CSV files (spy.csv and qqq.csv) not referenced in this tip. You can run the third use case example with these additional files to verify your ability to adapt and run the code with fresh data.

- Lastly, you can adapt any use case examples with appropriate data from your business.

About the author

Rick Dobson is an author and an individual trader. He is also a SQL Server professional with decades of T-SQL experience that includes authoring books, running a national seminar practice, working for businesses on finance and healthcare development projects, and serving as a regular contributor to MSSQLTips.com. He has been growing his Python skills for more than the past half decade -- especially for data visualization and ETL tasks with JSON and CSV files. His most recent professional passions include financial time series data and analyses, AI models, and statistics. He believes the proper application of these skills can help traders and investors to make more profitable decisions.

Rick Dobson is an author and an individual trader. He is also a SQL Server professional with decades of T-SQL experience that includes authoring books, running a national seminar practice, working for businesses on finance and healthcare development projects, and serving as a regular contributor to MSSQLTips.com. He has been growing his Python skills for more than the past half decade -- especially for data visualization and ETL tasks with JSON and CSV files. His most recent professional passions include financial time series data and analyses, AI models, and statistics. He believes the proper application of these skills can help traders and investors to make more profitable decisions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2024-05-10