By: Tal Olier | Updated: 2008-05-16 | Comments (2) | Related: > Query Optimization

Problem

As far as data management and RDBMS rules are concerned, relational databases are the most adoptable way for maintaining data with a reasonable level of concurrency and while supporting data management activities such as backups, mass purges, changing data structures, etc.

One problem is the difference in programming languages in traditional applications. The SQL (Structured Query Language) language is a declarative language that became the "Data Language" used for describing "what I need" and "where to fetch it from" in most organizations. OOP (Object Oriented Programming) languages became the most common practice among developers widely adopted by R&D (Research and Development) organizations around the world. So how do we bridge the gap?

Solution

Those two trends created a need for a "bridge" that will cover the gap by translating request from object oriented programming languages to SQL. In most cases the DAL (Data Access Layer) is used to describe the mechanism (mainly proprietary) built to manage all this "data bridging task" in a centralized way.

Since database vendors (Microsoft, Oracle, IBM, etc.) offer a very large set of proprietary commands in their specific flavor of in SQL, translation in the DAL has to support many options. What ends up happening is the implementation details sometimes lose many of the performance optimizations built into the engine. This has been causing many of these DAL's to be implemented in a very straight forward way, that being break down the request to smaller pieces translating each to it's equivalent SQL statements and building the "SELECT... FROM... WHERE..." clause that will figuratively do the job.

Over the years I have been observing some DAL's design and implementation and from my experience a "machine written SQL statement" sometimes tend to be very long text statements. Just the other day the question popped up "What is the maximum text length of queries should we aim for?", so I checked the Maximum Capacity Specifications for SQL Server 2005 and found that the length of a string containing SQL statements (i.e. batch size) for both 32 and 64 bit systems is defined as 65,536 * Network packet size. The default network packet size is 4096, so the SQL statement text is limited for 256 MB.

I suspected that long text queries (with much less than 256 MB) will create some challenge for the server's CPU. So I thought this should be tested and published in this tip. So for the scope of this tip we are trying to address these items:

- Proving that long text queries will consume your CPU

- Give some sense regarding the actual penalty expected on a medium sized server

- Dual core CPU with 2GB of RAM and 4 x 10,000 RPM disks

Test Table Characteristics

For my test I am going to create a table (called t1000) with 200,000 records. This table has many different data types because I think it is a reasonable representation of many production tables work with on a regular basis. The characteristics of this table include:

- A single integer column as a primary key (clustered by default).

- A varchar column.

- A char column simulating additional 1 KB of data.

- Five integer columns that will be used for creating the long text query's in the WHERE clause.

Script: Create Test Table

| create table t1000 ( c1 int not null constraint test_pk primary key, c2 varchar(10) not null, c3 char(1000), c4 int not null, c5 int not null, c6 int not null, c7 int not null, c8 int not null ) go |

Script: Populate Test Table

| set nocount on declare @i as int set @i = 0 while @i<200000 begin set @i = @i + 1 insert into t1000 (c1, c2, c3, c4, c5, c6, c7, c8) values (@i, cast (@i as varchar (10)), '...simulating additional 1k data...', @i, @i, @i, @i, @i) end set nocount off go |

Script: Creating Test Queries

Since I am planning to test some very long queries, I will produce them in an automatic way. My first thought was to just print some long text to the screen and then paste it to a new SQL Server Management Studio's query window. What I found out was that long queries (hundreds of KB) are a bit heavy for the Management Studio (especially when word wrap is on) so I turned to the next best thing - files.

Writing text files can be done in various programming languages but since we deal with SQL Server I'll demonstrate a T-SQL way I learned from Reading and Writing Files in SQL Server using T-SQL - a great article by Phil Factor. I will use the following stored procedure, originally published by Phil here with a few tweaks for my needs.

| create PROCEDURE spWriteStringToFile (@String Varchar(max), --8000 in SQL Server 2000 @Path VARCHAR(255), @Filename VARCHAR(100) ) AS DECLARE @objFileSystem int ,@objTextStream int, @objErrorObject int, @strErrorMessage Varchar(1000), @Command varchar(1000), @hr int, @fileAndPath varchar(80) set nocount on select @strErrorMessage='opening the File System Object' EXECUTE @hr = sp_OACreate 'Scripting.FileSystemObject' , @objFileSystem OUT Select @FileAndPath=@path+'\'+@filename if @HR=0 Select @objErrorObject=@objFileSystem , @strErrorMessage='Creating file "'+@FileAndPath+'"' if @HR=0 execute @hr = sp_OAMethod @objFileSystem , 'CreateTextFile' , @objTextStream OUT, @FileAndPath,2,True if @HR=0 Select @objErrorObject=@objTextStream, @strErrorMessage='writing to the file "'+@FileAndPath+'"' if @HR=0 execute @hr = sp_OAMethod @objTextStream, 'Write', Null, @String if @HR=0 Select @objErrorObject=@objTextStream, @strErrorMessage='closing the file "'+@FileAndPath+'"' if @HR=0 execute @hr = sp_OAMethod @objTextStream, 'Close' if @hr<>0 begin Declare @Source varchar(255), @Description Varchar(255), @Helpfile Varchar(255), @HelpID int EXECUTE sp_OAGetErrorInfo @objErrorObject, @source output,@Description output,@Helpfile output,@HelpID output Select @strErrorMessage='Error whilst ' +coalesce(@strErrorMessage,'doing something') +', '+coalesce(@Description,'') raiserror (@strErrorMessage,16,1) end EXECUTE sp_OADestroy @objTextStream EXECUTE sp_OADestroy @objTextStream GO |

Script: Enable OLE Automation

Since the stored procedure above uses OLE Automation you will need to enable it on your SQL Server because this option is turned off by default due to security concerns. It is still fun playing with it :). Turning on the Ole Automation is done with the following command:

| EXEC sp_configure 'Ole Automation Procedures', 1 RECONFIGURE WITH OVERRIDE GO |

In case your server does not have Ole Automation enabled, trying to run the above stored procedure will produce the following error:

| Msg 15281, Level 16, State 1, Procedure sp_OACreate, Line 1 SQL Server blocked access to procedure 'sys.sp_OACreate' of component 'Ole Automation Procedures' because this component is turned off as part of the security configuration for this server. A system administrator can enable the use of 'Ole Automation Procedures' by using sp_configure. For more information about enabling 'Ole Automation Procedures', see "Surface Area Configuration" in SQL Server Books Online. |

Script: What SQL statement should I use for my test?

I want to write a simple query that will return only one row but will have a very long WHERE clause. The following is an example query:

| select top 1 c1 from t1000 where c1 > 0 or (c5 = 1) or (c6 = 2) or (c7 = 3) or (c8 = 4) or (c4 = 5) or (c5 = 6) or (c6 = 7) or (c7 = 8) or (c8 = 9) or (c4 = 10) GO |

All that is left is to create a T-SQL block that will create a query similar to the one above:

| /* 01 */ set nocount on /* 02 */ declare @i as int /* 03 */ declare @sql_stmt as varchar(max) /* 04 */ declare @num_of_ors as int /* 05 */ set @num_of_ors = 5000 /* 06 */ set @i = 0 /* 07 */ set @sql_stmt = 'select top 1 c1 from t1000 where c1 > 0' /* 08 */ while @i<@num_of_ors /* 09 */ begin /* 10 */ set @i = @i + 1 /* 11 */ set @sql_stmt = @sql_stmt + /* 12 */ ' or (c' /* 13 */ + /* 14 */ cast /* 15 */ ( /* 16 */ @i%5+4 /* 17 */ as varchar(10) /* 18 */ ) /* 19 */ + /* 20 */ ' = ' /* 21 */ + /* 22 */ cast /* 23 */ ( /* 24 */ @i as varchar(10) /* 25 */ ) /* 26 */ + /* 27 */ ')' /* 28 */ end /* 29 */ set nocount off /* 30 */ execute spWriteStringToFile @sql_stmt, 'c:\temp\', 'query.sql' /* 31 */ print 'Done.' /* 32 */ go |

Here is an explanation of the code:

- Lines 01 to 06 are initiating variables, line 4 holds the number of "OR"s that the where clause will include.

- Line 07 is the beginning of the SQL statement that is going to be produced.

- Lines 08 - 28 holds a while loop that creates the where clause. Line 30 is using the previously created stored procedure (spWriteStringToFile) to write the query to a file.

For each file I will also add the code which will use the correct database, the dbcc commands to flush the cache and SET STATISTICS to show the IO, CPU and execution plan of the query:

| use total_long_text_queries go dbcc dropcleanbuffers dbcc freeproccache go SET STATISTICS IO ON go SET STATISTICS TIME ON go SET STATISTICS PROFILE ON go |

Running the query above with the @num_of_ors set to 5000, 10000, 15000 and 20000 produces the following files:

- query05000.txt with the size of 145KB.

- query10000.txt with the size of 292KB.

- query15000.txt with the size of 448KB.

- query20000.txt with the size of 604KB.

Running The Queries

As I mentioned earlier loading such long queries can be a bit heavy for Management Studio. So during my testing I ran the queries with the Sqlcmd Utility (new and much enhanced version of osql) which is perfect for running queries entered at the command line or from a parameter file.

Running the test queries with sqlcmd utility is very easy. For testing purposes I used the command below to connect to the SQL Server instance, read from an input file and write output to an file.

| sqlcmd -S <server>\<instance> -i c:\temp\query<XX>000.txt -o c:\temp\queryresults<XX>000.txt |

Analyzing the Results

Now that we have run the queries from above, lets take a look at the result:

Based on the analysis, I would like to bring attention on two specific portions of the results:

- First, review the section outlining how much I/O was performed by the query

- As an example in the queryresults20000.txt file:

- Table 't1000'. Scan count 1, logical reads 3, physical reads 2, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0

- As an example in the queryresults20000.txt file:

- Second, review the section outlining how much time was wasted on parsing the query

- As an example in the queryresults20000.txt file:

- SQL Server parse and compile time: CPU time = 83829 ms, elapsed time = 83893 ms

- As an example in the queryresults20000.txt file:

These results show the following trends:

- Running the query required almost no I/O resources.

- Most of the time was "parse and compile time".

- "parse and compile time" is mostly composed of CPU time.

- The CPU time consumed raises as the query's text gets longer.

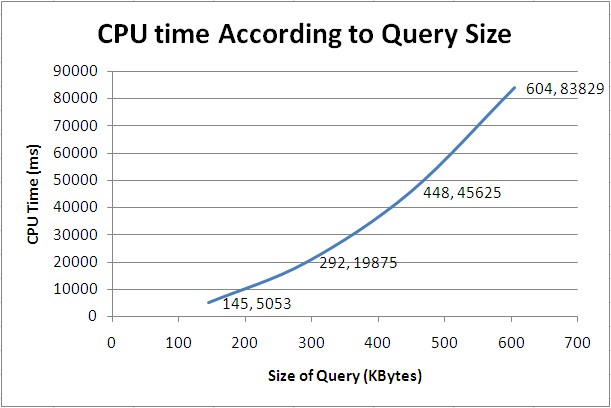

I have summarized the results into this Excel file; though the bottom line is included in the following table and graph:

| Size of Query (KB) | CPU Time (ms) | Elapsed Time (ms) |

| 145 | 5053 | 5053 |

| 292 | 19875 | 19944 |

| 448 | 45625 | 45657 |

| 604 | 83829 | 83893 |

The graphic below outlines the size of the query and the CPU time in milliseconds for the three tests conducted separated by a comma. Based on this information, I believe we have found out that lengthy SQL Server queries will consume your CPU.

Next Steps

- When designing a DAL (Data Access Layer) or using one in your applications, you should take the following items into consideration:

- Long SQL statements require a large amount of CPU to processes regardless of the actual record amount being fetched.

- DAL architecture should address functionality challenges in addition to performance challenges including the length of the SQL query statement created. A good rule of thumb can be to make sure that all queries larger than 50KB are thoroughly tested to make sure they are not creating a performance issue.

- DAL implementers might want to consider including a fuse for limiting the size of queries.

- You might want to get more familiar with sqlcmd utility as this can be a key tool for testing, maintenance and general usage.

About the author

Tal Olier is a database expert currently working for HP holding various positions in IT and R&D departments.

Tal Olier is a database expert currently working for HP holding various positions in IT and R&D departments.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2008-05-16